Challenge

Technical systems that automatically detect and observe persons can identify potentially dangerous situations and trigger an appropriate reaction. This is an essential function for driver assistance systems and autonomous driving, but also for the automatic monitoring of an environment. Various types of sensors are promising candidates for such tasks. LiDAR sensors have the advantage of directly delivering 3D characteristics of the environment and the objects in it. This enables the separation of foreground and background and provides an accurate location of detected objects. LiDAR sensors also capture the environment fast enough to recognize people in the data in real time. However, compared to cameras, they provide a relatively low local point density: while a standard camera delivers several million pixels in a specific field of view, a typical 360° laser scanner generates point clouds of, for example, 100,000 points per revolution (i.e., per 360° scan). The challenge is to benefit from the advantages of LiDAR sensors despite the low data density.

Solution concepts

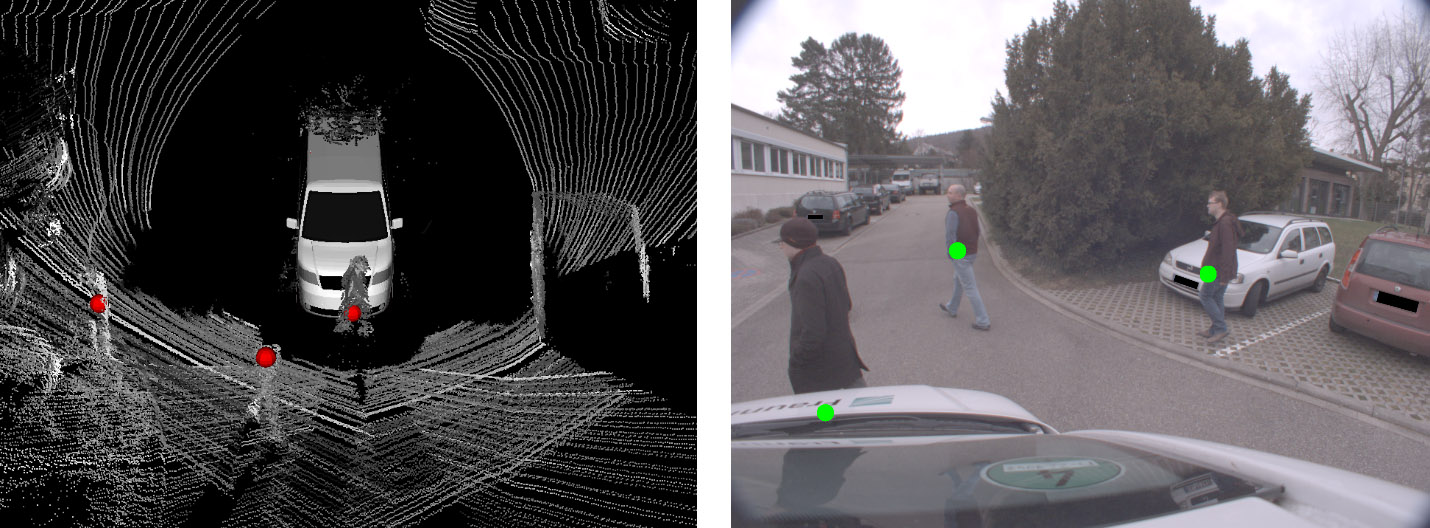

Within the scope of these applications, a multi-sensor system can combine the advantages of individual sensors and compensate for their respective disadvantages. A system demonstrator for vehicle-based person detection is currently being developed at IOSB, in which 3D LiDAR data are evaluated as the primary information source, supplemented if necessary by additional sensors such as cameras. First, 3D data are collected and evaluated for the entire vehicle environment using a voting-based procedure. In this method, after feature extraction, votes for possible positions of persons are accumulated based on a previously trained dictionary of geometric words (bag-of-words or implicit shape model). Accumulation points are then identified in the resulting voting space, each of which represents a detected person.

Figure 1: Person detection in 3D point clouds. Left: Processed point clouds of two sensors and detection results. Right: The same detections transferred to a camera image of the sensor system.
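The accumulation step of such a voting-based procedure can be illustrated with a strongly simplified sketch. It is not the demonstrator's implementation: it assumes that feature extraction and dictionary lookup have already produced weighted votes for person positions, and it accumulates them in a 2D ground-plane grid instead of a full 3D voting space. The function names, the cell size and the score threshold are hypothetical.

```python
from collections import defaultdict
import math


def accumulate_votes(votes, cell_size=0.5):
    """Sum weighted (x, y, weight) votes in a sparse 2D grid (assumed layout)."""
    grid = defaultdict(float)
    for x, y, weight in votes:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        grid[cell] += weight
    return grid


def find_detections(grid, cell_size=0.5, min_score=2.0):
    """Return (x, y, score) for cells that are local maxima above min_score."""
    detections = []
    for (ix, iy), score in grid.items():
        if score < min_score:
            continue
        # Scores of the eight neighbouring cells; empty cells count as 0.
        neighbours = [
            grid.get((ix + dx, iy + dy), 0.0)
            for dx in (-1, 0, 1)
            for dy in (-1, 0, 1)
            if (dx, dy) != (0, 0)
        ]
        # Note: adjacent cells with identical scores are both reported.
        if all(score >= n for n in neighbours):
            centre = ((ix + 0.5) * cell_size, (iy + 0.5) * cell_size)
            detections.append((centre[0], centre[1], score))
    return detections


# Three votes agree on one position, a fourth is an isolated outlier.
votes = [(1.1, 2.1, 1.0), (1.2, 2.2, 1.0), (1.3, 2.3, 1.0), (5.0, 5.0, 1.0)]
detections = find_detections(accumulate_votes(votes))
```

In this example only the cell that collects three votes exceeds the threshold, so the isolated vote does not lead to a detection. A real system would typically use a smoother maximum search, such as mean shift, rather than a fixed grid.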

In addition to detecting persons, tracking them is useful for the applications mentioned. Tracking makes it possible to derive information about the movement behavior of persons, which can indicate potentially hazardous situations. A tracking procedure can also extrapolate the future positions of persons, and these predictions can be integrated into the voting space of the person detection as additional votes in order to improve the detection performance. This is essential, for example, if a person temporarily leaves the field of view of the sensors.
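How predicted positions could enter the voting space can be sketched as follows. The sketch assumes a simple constant-velocity motion model and a track representation `(x, y, vx, vy)` in the ground plane. Both are illustrative assumptions, as are the function names and the vote weight; the text does not specify the motion model that is actually used.

```python
def predict_position(track, dt):
    """Extrapolate a track (x, y, vx, vy) by dt seconds at constant velocity."""
    x, y, vx, vy = track
    return (x + vx * dt, y + vy * dt)


def prediction_votes(tracks, dt, weight=1.0):
    """Turn predicted track positions into additional (x, y, weight) votes."""
    return [(*predict_position(track, dt), weight) for track in tracks]


# One tracked person walking diagonally; predict 2 s ahead.
tracks = [(1.0, 2.0, 0.5, -1.0)]
extra_votes = prediction_votes(tracks, dt=2.0, weight=0.5)
```

The resulting votes would be added to those derived from the current scan, so that a person who is briefly occluded or poorly sampled can still produce a maximum in the voting space.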

Another source of information for analyzing the behavior of persons is their pose, i.e. the relative positions of individual body parts. Recognizing the face also makes it possible to draw conclusions about a person's viewing direction. This is where 360° scanning LiDAR sensors reach their limits: the low data density makes it difficult to recognize smaller body parts, and the low resolution makes the data hardly suitable for facial recognition. Cameras, and especially infrared cameras, are a useful supplement. They help in the detection of body parts and enable facial recognition. Two approaches are conceivable. On the one hand, cameras can be pointed specifically at detected persons, or the analysis can be restricted to the parts of an image that correspond to the direction in which a person has been detected. On the other hand, the cameras can run their own detection processes in parallel to the LiDAR sensors. Their results can then be transferred as vectors, according to the imaging geometry, into the voting space of the LiDAR-based detection procedures.
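The first approach, restricting the image analysis to the region in which a person has been detected, can be illustrated with a pinhole camera model. The sketch assumes that the detected position is already given in camera coordinates (x to the right, y downwards, z along the viewing direction) and ignores lens distortion. The intrinsic parameters `fx`, `fy`, `cx`, `cy`, the assumed person size and the function name are hypothetical.

```python
def person_roi(point_cam, fx, fy, cx, cy, image_size, person_size=(1.0, 2.0)):
    """Project a 3D detection into the image and return a bounding box.

    point_cam   -- (x, y, z) of the person's centre in camera coordinates [m]
    image_size  -- (width, height) of the image [px]
    person_size -- assumed (width, height) of a person [m]
    Returns (u_min, v_min, u_max, v_max), or None if the point is behind
    the camera.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    # Pinhole projection of the centre point.
    u = fx * x / z + cx
    v = fy * y / z + cy
    # Apparent half extent of the person in pixels at distance z.
    half_w = 0.5 * person_size[0] * fx / z
    half_h = 0.5 * person_size[1] * fy / z
    width, height = image_size
    # Clamp the box to the image borders.
    return (
        max(0.0, u - half_w),
        max(0.0, v - half_h),
        min(float(width), u + half_w),
        min(float(height), v + half_h),
    )


# Person 10 m in front of the camera, 1 m to the right.
roi = person_roi((1.0, 0.0, 10.0), 1000.0, 1000.0, 960.0, 540.0, (1920, 1080))
```

Only the pixels inside the returned box would then be passed to a body-part or face analysis. In practice, the extrinsic calibration between LiDAR and camera has to be applied before this step.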

The actual detection of hazards will be the subject of future work and will be based on the measurements of all components. In addition to imaging sensors, the sensor system also includes sensors for determining its own motion (IMU/GNSS). The system's own trajectory, combined with the determined direction of movement of a person, can indicate whether a collision with that person is to be expected. This risk assessment can become even more conclusive if, for example, conclusions about a person's attention can be drawn from their viewing direction or viewing behavior (e.g. looking around before crossing the road).
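One conceivable way to combine the vehicle's own trajectory with a person's movement is a closest-point-of-approach check. The following sketch is purely illustrative, since the text describes hazard detection as future work: it assumes that both the vehicle and the person move with constant velocity in the ground plane, and the function name is hypothetical.

```python
import math


def closest_approach(p_vehicle, v_vehicle, p_person, v_person):
    """Return (time, distance) of closest approach for constant velocities.

    Positions are (x, y) in metres, velocities (vx, vy) in metres per second.
    Only future times (t >= 0) are considered.
    """
    # Position and velocity of the person relative to the vehicle.
    rx = p_person[0] - p_vehicle[0]
    ry = p_person[1] - p_vehicle[1]
    vx = v_person[0] - v_vehicle[0]
    vy = v_person[1] - v_vehicle[1]
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        t = 0.0  # no relative motion: the distance stays constant
    else:
        t = max(0.0, -(rx * vx + ry * vy) / speed_sq)
    distance = math.hypot(rx + vx * t, ry + vy * t)
    return t, distance


# Vehicle driving at 10 m/s; person 20 m ahead, stepping onto the lane.
t_cpa, d_cpa = closest_approach((0.0, 0.0), (10.0, 0.0), (20.0, 2.0), (0.0, -1.0))
```

A small distance at a near future time would indicate a possible collision. Cues such as the person's viewing direction could then be used to weight this indication.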

Publications

- Borgmann, B., Hebel, M., Arens, M., Stilla, U., 2020. Pedestrian detection and tracking in sparse MLS point clouds using a neural network and voting-based approach. ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume V-2-2020, pp. 187-194. [doi: 10.5194/isprs-annals-V-2-2020-187-2020]

- Borgmann, B., Hebel, M., Arens, M., Stilla, U., 2017. Detection of persons in MLS point clouds using implicit shape models. LS2017: ISPRS Workshop Laser Scanning 2017. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci., XLII-2-W7, pp. 203-210. [doi: 10.5194/isprs-archives-XLII-2-W7-203-2017]

Fraunhofer Institute of Optronics, System Technologies and Image Exploitation IOSB
